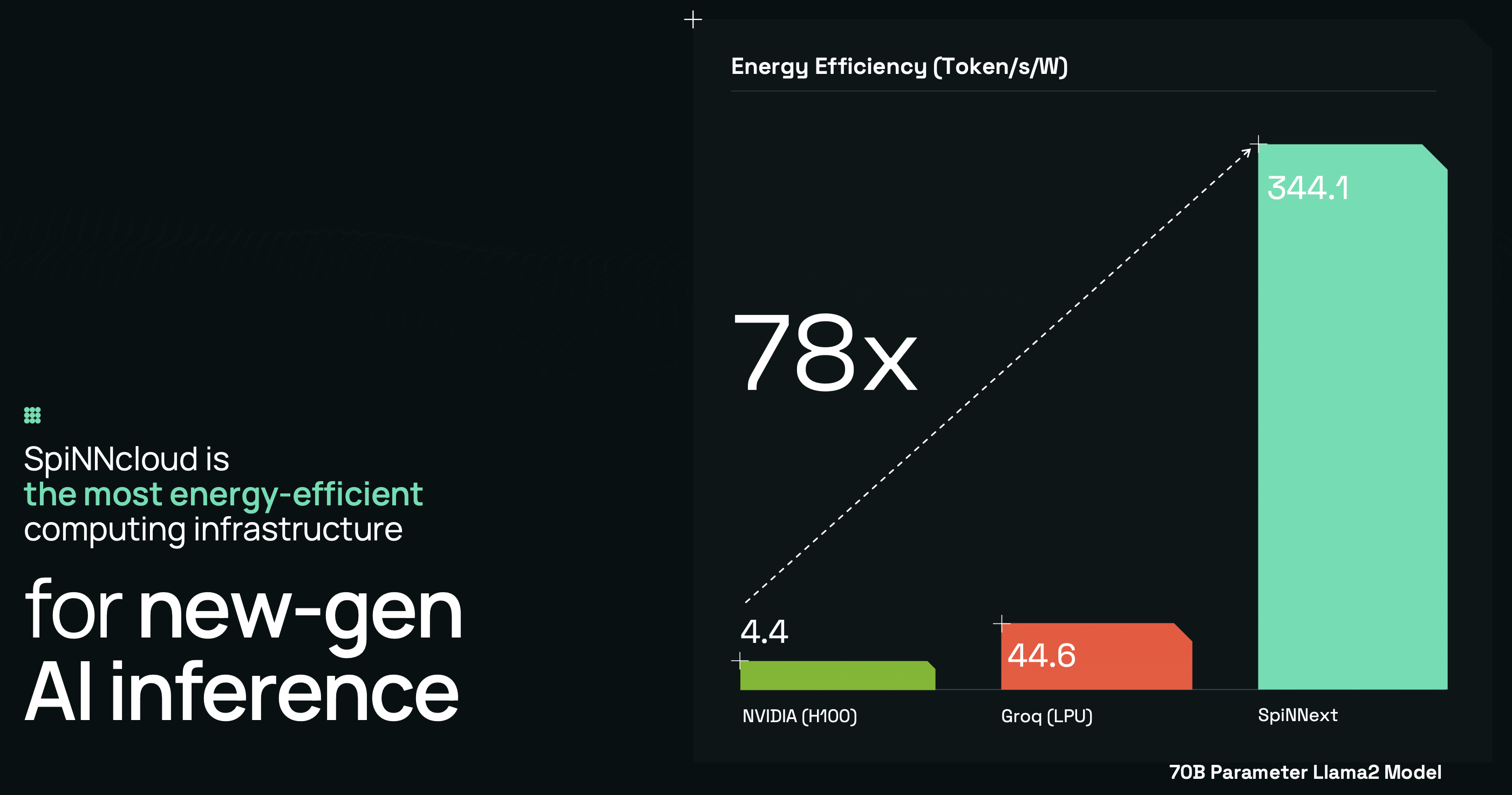

The next generation of GenAI models isn’t dense—it’s sparse. Instead of activating every layer or weight, sparse models compute only what’s needed, dynamically skipping irrelevant parts. This shift enables massive energy savings and faster inference—but only if the hardware is designed to support it.

GPUs were built for dense workloads. They waste energy and compute power on zeros. Dynamic sparsity requires a new kind of silicon.
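To make the "compute on zeros" point concrete, here is a minimal sketch in plain Python that counts multiply-accumulate operations for a dense matrix-vector product and for one that skips zero activations. The matrix size, the 90% sparsity level, and the function names `dense_matvec` and `sparse_matvec` are illustrative assumptions, not SpiNNcloud's design or measurements. It shows only the arithmetic saved, not energy or hardware behavior.

```python
import random

def dense_matvec(weights, activations):
    """Multiply every weight by its activation, including zero activations."""
    macs = 0  # multiply-accumulate operations performed
    out = []
    for row in weights:
        acc = 0.0
        for w, a in zip(row, activations):
            acc += w * a
            macs += 1
        out.append(acc)
    return out, macs

def sparse_matvec(weights, activations):
    """Same product, but only visit columns whose activation is non-zero."""
    active = [(j, a) for j, a in enumerate(activations) if a != 0.0]
    macs = 0
    out = []
    for row in weights:
        acc = 0.0
        for j, a in active:
            acc += row[j] * a
            macs += 1
        out.append(acc)
    return out, macs

# Hypothetical layer: 64 outputs, 256 inputs, roughly 90% of activations zero.
rng = random.Random(0)
rows, cols = 64, 256
weights = [[rng.gauss(0.0, 1.0) for _ in range(cols)] for _ in range(rows)]
activations = [rng.gauss(0.0, 1.0) if rng.random() >= 0.9 else 0.0
               for _ in range(cols)]

dense_out, dense_macs = dense_matvec(weights, activations)
sparse_out, sparse_macs = sparse_matvec(weights, activations)

print(f"dense MACs:  {dense_macs}")
print(f"sparse MACs: {sparse_macs}")
print(f"fraction of work kept: {sparse_macs / dense_macs:.2%}")
```

Both functions return the same result. The sparse version simply performs fewer operations, in proportion to how many activations are non-zero. Whether that saving is realized in practice depends on hardware that can skip the zeros cheaply, which is the argument made above.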

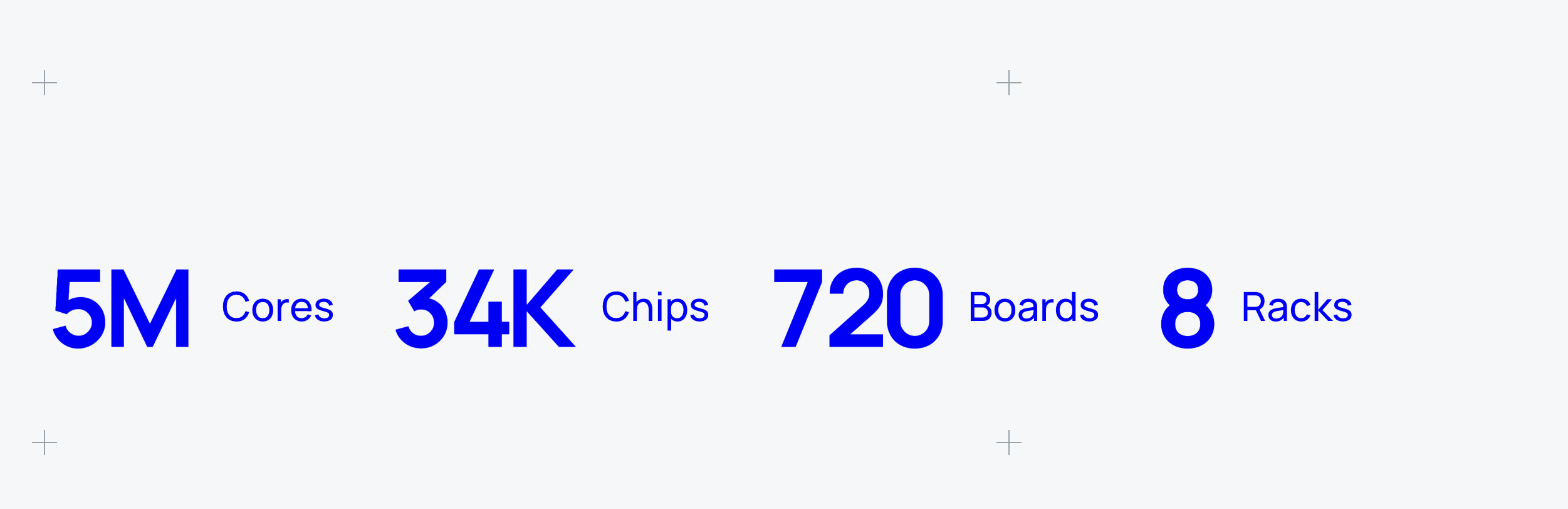

SpiNNcloud builds brain-inspired neuromorphic hardware from the ground up to run sparse GenAI workloads with unprecedented energy efficiency.

“We have reached the commercialization of neuromorphic supercomputers ahead of other companies.”

— Hector Gonzalez, Co-CEO of SpiNNcloud (BBC)

BBC Technology recently profiled SpiNNcloud as a potential “NVIDIA killer,” highlighting its commercial lead in neuromorphic computing:

“In May, SpiNNcloud Systems announced it will begin selling neuromorphic supercomputers…

Companies like Intel and IBM are still in R&D—SpiNNcloud is shipping hardware.”

— Zoe Corbyn, BBC Technology Reporter

The AI inference market is projected to exceed $72.6B by 2030. SpiNNcloud is one of the few companies not just chasing that market but re-architecting how it will be won, with a diverse customer base and a trajectory toward nine-figure revenue in the coming years.

Banyan AI Fund I is investing in the seed round, ahead of a Series A.

We believe SpiNNcloud is a generational company positioned to reshape inference infrastructure. It aligns with three megatrends driving our thesis:

SpiNNcloud is led by Dr. Hector Gonzalez, a deep technologist with a decade of experience in neuromorphic engineering, energy-efficient computing, and hybrid AI. A graduate of TU Dresden, Hector previously co-led Europe’s Human Brain Project and helped drive the transition of SpiNNaker from academic prototype to commercial platform.

He’s supported by an exceptional team of scientists, engineers, and operators, including co-founders from Amazon, GlobalFoundries, RacyICs, and TU Munich, and advisors such as Steve Furber (a principal designer of the original ARM processor) and experts from DeepMind, IQM, and ETH Zurich. With over 50 employees, 30% of whom hold PhDs, SpiNNcloud has the technical depth and execution capacity to deliver.

We invest in AI-native infrastructure that redefines what’s possible—not incremental GPU improvements. SpiNNcloud represents a full-stack rethink of inference compute, aligned with the most fundamental trend in AI today: energy-efficient, sparse computation at scale.

This is what infrastructure for the Third Wave of AI looks like.

Sam Awrabi

Founder and MD, Banyan